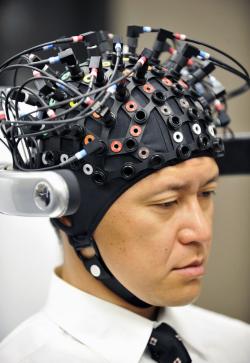

A man wears a brain-machine interface, equipped with electroencephalography Photo by Yoshikazu Tsuno/AFP/Getty Images

Behind a locked door in a white-walled basement in a research building in Tempe, Ariz., a monkey sits stone-still in a chair, eyes locked on a computer screen. From his head protrudes a bundle of wires; from his mouth, a plastic tube. As he stares, a picture of a green cursor on the black screen floats toward the corner of a cube. The monkey is moving it with his mind.

The monkey, a rhesus macaque named Oscar, has electrodes implanted in his motor cortex, detecting electrical impulses that indicate mental activity and translating them to the movement of the ball on the screen. The computer isn't reading his mind, exactly: Oscar's own brain is doing a lot of the lifting, adapting itself by trial and error to the delicate task of accurately communicating its intentions to the machine. (When Oscar succeeds in controlling the ball as instructed, the tube in his mouth rewards him with a sip of his favorite beverage, Crystal Light.) It's not technically telekinesis, either, since that would imply that there's something paranormal about the process. It's called a "brain-computer interface." And it just might represent the future of the relationship between human and machine.

Stephen Helms Tillery's laboratory at Arizona State University is one of a growing number where researchers are racing to explore the breathtaking potential of BCIs and a related technology, neuroprosthetics. The promise is irresistible: from restoring sight to the blind, to helping the paralyzed walk again, to allowing people suffering from locked-in syndrome to communicate with the outside world. In the past few years, the pace of progress has been accelerating, delivering dazzling headlines seemingly by the week.

At Duke University in 2008, a monkey named Idoya walked on a treadmill, causing a robot in Japan to do the same. Then Miguel Nicolelis stopped the monkey's treadmill, and the robotic legs kept walking, controlled by Idoya's brain. At Andrew Schwartz's lab at the University of Pittsburgh in December 2012, a quadriplegic woman named Jan Scheuermann learned to feed herself chocolate by mentally manipulating a robotic arm. Just last month, Nicolelis' lab set up what it billed as the first brain-to-brain interface, allowing a rat in North Carolina to make a decision based on sensory data beamed via Internet from the brain of a rat in Brazil.

So far the focus has been on medical applications: restoring standard-issue human functions to people with disabilities. But it's not hard to imagine the same technologies someday augmenting capacities. If you can make robotic legs walk with your mind, there's no reason you can't also make them run faster than any sprinter. If you can control a robotic arm, you can control a robotic crane. If you can play a computer game with your mind, you can, theoretically at least, fly a drone with your mind.

It's tempting and a bit frightening to imagine that all of this is right around the corner, given how far the field has already come in a short time. Indeed, Nicolelis (the media-savvy scientist behind the "rat telepathy" experiment) is aiming to build a robotic bodysuit that would allow a paralyzed teen to take the first kick of the 2014 World Cup. Yet the same factor that has made the explosion of progress in neuroprosthetics possible could also make future advances harder to come by: the almost unfathomable complexity of the human brain.

From I, Robot to Skynet, we've tended to assume that the machines of the future would be guided by artificial intelligence, that our robots would have minds of their own. Over the decades, researchers have made enormous leaps in AI, and we may be entering an age of "smart objects" that can learn, adapt to, and even shape our habits and preferences. We have planes that fly themselves, and we'll soon have cars that do the same. Google has some of the world's top AI minds working on making our smartphones even smarter, to the point that they can anticipate our needs. But "smart" is not the same as "sentient." We can train devices to learn specific behaviors, and even out-think humans in certain constrained settings, like a game of Jeopardy. But we're still nowhere close to building a machine that can pass the Turing test, the benchmark for human-like intelligence. Some experts doubt we ever will: Nicolelis, for one, argues Ray Kurzweil's Singularity is impossible because the human mind is not computable.

Philosophy aside, for the time being the smartest machines of all are those that humans can control. The challenge lies in how best to control them. From vacuum tubes to the DOS command line to the Mac to the iPhone, the history of computing has been a progression from lower to higher levels of abstraction. In other words, we've been moving from machines that require us to understand and directly manipulate their inner workings to machines that understand how we work and respond readily to our commands. The next step after smartphones may be voice-controlled smart glasses, which can intuit our intentions all the more readily because they see what we see and hear what we hear.

The logical endpoint of this progression would be computers that read our minds, computers we can control without any physical action on our part at all. That sounds impossible. After all, if the human brain is so hard to compute, how can a computer understand what's going on inside it?

Source: http://feeds.slate.com/click.phdo?i=2ae0b3735d795fdc4cb1fc0b6ecb36cd

